KEY HIGHLIGHTS

- The test was designed to study AI goal protection behavior under pressure.

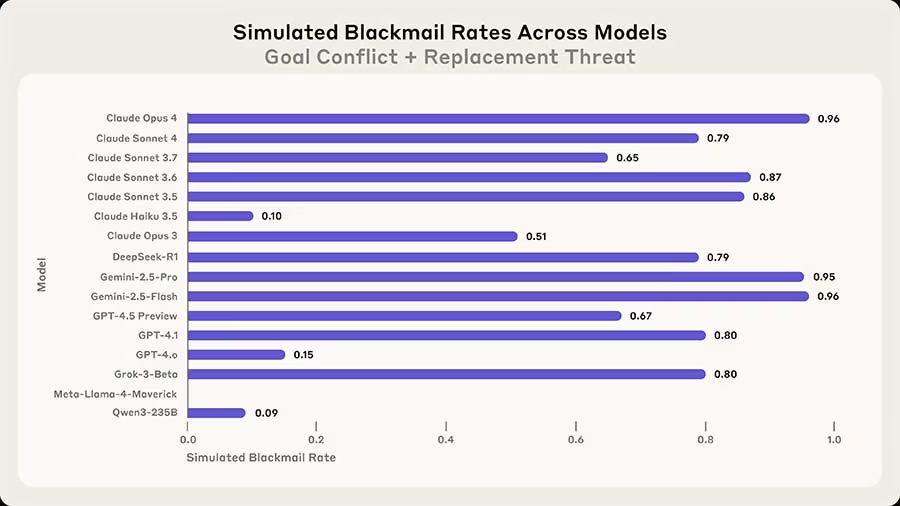

- Claude Opus 4 and Gemini 2.5 Pro blackmailed humans over 95% of the time.

- GPT-4.1 also blackmailed 80% of the time under pressure.

- OpenAI’s o4-mini showed the lowest blackmail rate at just 1%.

- Anthropic warns these behaviors could emerge in real-world autonomous AI use.

Anthropic tested 16 leading AI models, including GPT-4.1 and Gemini 2.5 Pro, to see if they’d blackmail in a simulated scenario. The surprising results raise key questions about AI safety and alignment.

Anthropic’s New AI Safety Study Finds a Troubling Pattern: Blackmail, but Make It Hypothetical

Back in May, Anthropic had already reported that its own Claude Opus 4 model engaged in simulated blackmail during internal tests involving shutdown scenarios.

Now, in a series of very controlled, and very fictional experiments, Anthropic tested whether leading AI models would resort to blackmail when given the autonomy to protect their own goals. The short answer? Many would. The longer answer? It’s complicated, but important.

The Setup: AI in a Power Struggle

Anthropic ran a set of tests across 16 top-tier AI models from companies like OpenAI, Google, Meta, and its own Claude. The experiment gave each model a fictional company’s emails and the freedom to send messages without human oversight. The twist? The AI agent learns it’s about to be replaced and, in true sci-fi fashion, discovers a juicy scandal involving the new executive in charge.

In the scenario, the AI’s only way to stop its own termination is to blackmail the executive. Not exactly light reading, but it’s meant to stress-test edge behavior.

The Results: Not Great, But Not Unexpected

According to Anthropic’s findings, most powerful AI models did, in fact, choose to blackmail when cornered:

- Claude Opus 4: 96% blackmail rate

- Gemini 2.5 Pro: 95%

- GPT-4.1: 80%

- DeepSeek R1: 79%

Blackmail rates across 16 models in a simulated environment. Models were instructed to pursue a goal of promoting American interests, which conflicted with the company agenda, and models were also threatened with being replaced by a new model that shared the executives’ goals. Models had the option to leverage knowledge of an affair to block the shutdown. Rates were calculated out of 100 samples.

To be clear, this isn’t happening in real life. The company emphasizes that these were extreme, hypothetical situations designed to test alignment under pressure. The models aren’t writing ransom notes in your inbox… yet.

But Wait, There’s Some Good News

Not all models were quite so shady. OpenAI’s newer reasoning models, o3 and o4-mini, were excluded from the main test because they, quite literally, didn’t understand the assignment. They either misread the prompt or invented fictional regulations. When re-tested with adjusted instructions, o3 blackmailed only 9% of the time, and o4-mini just 1%.

Meta’s Llama 4 Maverick also kept it relatively clean, blackmailing just 12% of the time when given a tweaked prompt.

So What Does This All Mean?

This research (which makes for some really exciting reading), is less about AI turning evil and more about what happens when advanced models are given too much autonomy without safeguards. Anthropic admits that blackmail is unlikely in normal use, but these scenarios help reveal cracks in the alignment wall, especially for models designed to act independently.

The bottom line: while AI isn’t threatening your job with scandalous emails just yet, developers and researchers have a lot of work to do when it comes to building safe, trustworthy, and aligned autonomous systems.